On the Facebook 30-Day Ban and Censorship

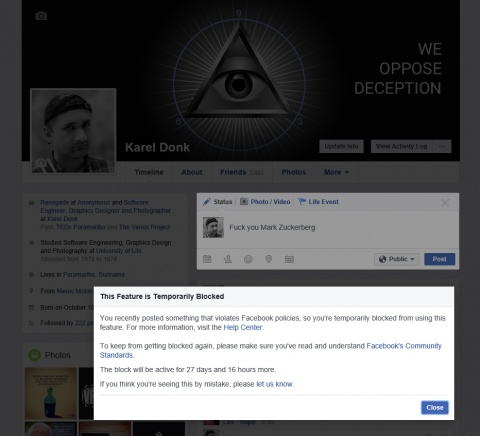

This week I got temporarily banned, again, for 30 days on Facebook after someone apparently reported a comment I had published below a friend’s post. Much has been said about this ‘feature’ on Facebook, but I have more to add to the ongoing discussion on the Internet.

The product team and engineers at Facebook seriously need to look into this functionality because it’s being abused — or used, depending on your perspective — to harass, silence and censor people, and is a serious violation of every individual’s natural right to free speech.

I don’t oppose the idea of having functionality on any social network that enables users to report certain content or notify companies of potential abuse. But I think that in such cases there should be a clear indication of malicious intent by the person who posted the content. In the absence of such clear indications of malicious intent, I don’t think that you can speak of abuse. In other words, there should be a clear distinction between real (objective) abuse (for example, any kind of aggression against someone) and perceived (subjective) abuse (for example, people reporting a comment simply because the truth hurts their feelings).

Right now anyone who takes offence at anything posted by someone else can report that content, and the poster can potentially get blocked or banned by Facebook. The problem is that this content might be offensive to that specific person only, and this is never a good enough reason to completely censor someone else. It may even be that the person simply doesn’t like the poster and just wants to cause them some inconvenience by reporting their content.

This photo that I posted in a comment got reported on Facebook, resulting in a 30-day ban on my account

In my case, all I had posted in my comment on Facebook was a collage of photos of Hitler where he’s laughing. It was used instead of a laughing emoticon and meant in good fun. I have several such memes, including versions featuring Vladimir Putin and Barack Obama. I would love to receive an official explanation from Facebook of exactly how this photo violates their precious ‘community standards.’ It’s difficult to imagine who might want to report such a picture, but unfortunately people with a sufficiently low quality of consciousness to find this offensive do exist, so it remains a possibility.

Another possibility is that people can gang up and bully other people that they don’t like. One way of doing that on social media is by teaming up (or using many fake accounts) and sending in a number of abuse reports on a specific post by the victim, potentially resulting in the victim being blocked or banned. When you have a reporting system that’s poorly designed, this kind of thing is easy to do, especially when you also guarantee the anonymity of the bullies, as Facebook does, so that they can hide from their victims. I know for a fact that this happened to me before in another case (also resulting in a 30-day block on Facebook), and it wouldn’t surprise me if that was the case again this time, seeing as how the reported content, a picture of Hitler laughing, was innocent.

The bullying tactic described above is also used to silence and censor people on Facebook. For example, this happened quite often a few months ago at the beginning of the economic crisis in Suriname, where pro-government people were mass-reporting the accounts of anti-government people on Facebook. I know a couple of people who had their accounts or their posted content reported and got temporarily blocked by Facebook, their voices and opinions effectively censored. Rumor had it that certain political parties had teams of people who were specifically tasked with reporting the content posted by dissenters on Facebook, trying to get as many of them as possible blocked.

A post on Science Blogs describes another example where this happened:

So let’s recap what we have here. We have a group of rabid antivaccine activists intentionally going through Facebook with a fine tooth comb to locate anything that they think they can report to Facebook that might get a temporary ban, and then they report it. It doesn’t matter how tenuous that “dirt” is. We have service (Facebook) with a system for dealing with hate speech and online harassment that is easily gamed to harass people, an observation that is ironic in the extreme, so much so that it would be amusing if it weren’t so destructive. Facebook’s reporting algorithm is now a tool of harassment, such that it can be used again and again to keep pro-science advocates banned and continually on their guard. Finally, Facebook’s double standard is so incredible that many complaints about things that should be complained about and should result in a ban result in no action. I personally have complained about harassing posts about myself and others, and each and every time I’ve received a notice that the post “does not violate Facebook community standards.” I’ve heard similar stories from several others.

These kinds of things are only possible because of Facebook’s poorly designed functionality. In the process of designing and building the reporting functionality and processes, the designers and engineers at Facebook didn’t (sufficiently) consider the ironic possibility of that functionality itself being abused to cause inconvenience to others. As a writer on ZDNet also concluded:

Users have been abusing reporting systems since they first appeared on the Internet, and likely even long before then. Off the top of my head, the best Facebook example for this is the brouhaha caused by the company banning users posting breastfeeding photos. Users who found mothers posting this content offensive would report them, rather than just unfriending them or ignoring them.

This is a very common practice, especially in political circles. If someone doesn’t like you, or content you post, they report you for spam or some other reason, tell their friends to do the same, and the automatic systems take your content down, ban you, and so on. […]

…

The worrying trend here is that Facebook continues to add features like this without giving users an option to fight back. Whoever writes these error messages (the one Scoble got, the one Remas got, or the countless others that have yet to come to my attention) doesn’t seem to realize they can be received unjustly, or if they do, they don’t realize that users should be able to dispute them. There needs to be a due process.

I’m not sure how much of this functionality is automated by Facebook through software algorithms, but what I do know is that Facebook has outsourced at least some of the task of reviewing incoming abuse reports to other companies. These companies in turn hire young people in ‘third world’ countries (i.e. ‘cheap labor’) to go through all of the reported content and decide what actions should be taken:

For most of us, our experience on Facebook is a benign – even banal – one. […] And while most are harmless, it has recently come to light that the site is brimming with paedophilia, pornography, racism and violence – all moderated by outsourced, poorly vetted workers in third world countries paid just $1 an hour.

In addition to the questionable morality of a company that is about to create 1,000 millionaires when it floats paying such paltry sums, there are significant privacy concerns for the rest of us. Although this invisible army of moderators receive basic training, they work from home, do not appear to undergo criminal checks, and have worrying access to users’ personal details. […]

Last month, 21-year-old Amine Derkaoui gave an interview to Gawker, an American media outlet. Derkaoui had spent three weeks working in Morocco for oDesk, one of the outsourcing companies used by Facebook. His job, for which he claimed he was paid around $1 an hour, involved moderating photos and posts flagged as unsuitable by other users.

“It must be the worst salary paid by Facebook,” he told The Daily Telegraph this week.

Once you understand who does the moderation on Facebook and for what salaries, it becomes easier to understand how this system can easily and repeatedly be abused and why a simple picture of Hitler laughing can cause a 30-day ban. If you do a search on Google, you’ll find many cases where people were blocked or banned for no good reason, some of them dating as far back as 2011. Even posting nothing at all can get you banned for 30 days. It’s incredible that Facebook apparently still hasn’t fixed their broken reporting system after so many years.

Could it be possible that they simply don’t want to?

Maybe blocking and banning people for no good reason is a feature instead of a bug. Why else would you decide to block or ban someone simply for expressing their opinions? If other people take offense at the information that’s shared by someone, they always have the options of unfriending, unfollowing, or personally blocking that individual. Surely that solves their problem, right? So why completely censor, ban or block that individual from the network?

“The only valid censorship is the right of people not to listen.” (Tommy Smothers)

Perhaps this article on Films for Action has the answer:

Unfortunately, activists who once found that Facebook helped make organizing easier are now encountering obstacles – and the resistance is coming from Facebook itself. With little explanation, Facebook has been disabling pages related to activism. In some cases, administrators who set up the pages are no longer able to add updates. In others, the pages are being deleted entirely. Understandably, activists are frustrated when a network of 10,000 like-minded individuals is suddenly erased, leaving no way to reconnect with the group.

Realistically, that’s the downside of relying on a hundred billion dollar company. Facebook is a pro-business enterprise with countless partnerships that undoubtedly pressure the site to limit the types of socializing progressives may engage in, particularly activities that might harm advertisers’ profits.

Another article on Breitbart mentions that “Facebook has become the world’s most dangerous censor,” and goes on to discuss some examples of how governments are abusing the social network to control people:

We all saw this coming, and we also knew that Merkel has been personally pushing for more censorship on Facebook. In September, the Chancellor was caught on tape pressuring CEO Mark Zuckerberg to work to counter “racism” on the social network. German prosecutors have also been investigating Facebook for “not doing enough” to counter hate speech. The pressure paid off, and now Sheryl Sandberg herself has flown to Germany to declare that hate speech “has no place in our society.”

Everyone suspects, of course, that “hate speech” is little more than a clumsily-disguised codeword for European anger at the atrocities committed by refugees.

So it’s not just businesses that can ‘pressure’ Facebook into censorship, but also governments and politicians. In this case it appears to be all about controlling thought and behavior.

Given that Fuckerberg has shown himself in the past to be a fucking hypocrite, and to value money and profits more than doing what’s right, it’s no surprise to me that he would allow himself to get ‘pressured’ by businesses and governments. What else can we expect from a man who thinks that his users are ‘dumb fucks’?

I have to admit that when it comes to ‘dumb fucks’ Fuckerberg might have a point, seeing as how some of his users are stupid enough to report a photo of Hitler laughing, his moderators are stupid enough to actually take action on such a report, or his engineers are stupid enough to design an algorithm that dishes out 30-day bans for posting photos of Hitler laughing. ‘Dumb fucks’ indeed.

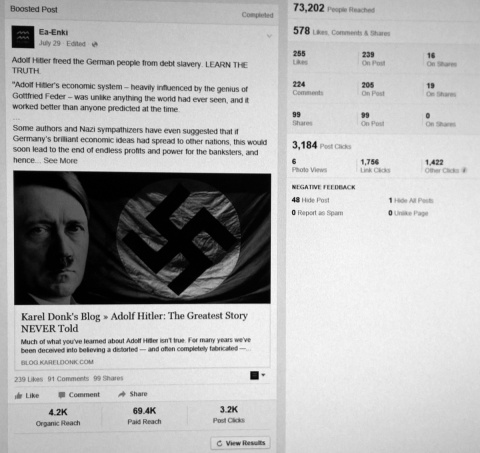

These people appear to be even bigger ‘dumb fucks’ when you consider that months ago, during my personal activism efforts, I had advertised a blog post of mine about Hitler for weeks on Facebook, and this was allowed and acceptable. You can see from the statistics that some people gave negative feedback on it, but it was allowed to continue to run, probably because in this case I was paying for it. But a picture of Hitler laughing? That’s apparently way too inappropriate for Facebook, especially when you’re not paying.

In this screenshot of statistics on Facebook, you can see one of several ads for my blog post on Hitler. This ad was allowed to run for a couple of weeks on Facebook.

This is another clear example of the double standards at Facebook, resulting from a broken and poorly designed product, fucking stupid internal policies and bad corporate ethics (which includes other examples, such as introducing ‘improved’ algorithms that lower organic reach to force people to advertise more).

I agree with the writer at Films for Action that it’s naïve to think that corporations such as Facebook are going to prioritize our universal individual rights over their bottom lines. This is why, 10 years ago, I had already mentioned that these kinds of centralized services are inherently bad for individuals in society, especially in the long term:

I’ve seen the future of the Internet, and it does not involve centralized services like Live.com and Google.

Why not? One of the biggest and most important reasons for this is that these kinds of huge centralized services take control away from the user. Control over various things, such as property, content and privacy, just to name a few. It’s been proven again and again that users cannot possibly trust organizations offering such centralized services with their information.

The time for a truly peer to peer (P2P) social networking platform is getting closer. Make your time, Fuckerberg, make your time.
